Alpha testing tips to help you beat the bugs

Jason Kaye, Senior Content Writer, Adjust, Apr 05, 2024.

In a world with high expectations and little patience, the practice of testing something before announcing it to the mainstream can only lead to a more positive reception. When it comes to apps, programs, and other software, the start of this process is called alpha testing.

What is alpha testing?

Alpha testing is a stage in software development that aims to identify bugs and issues before releasing an app, mobile game or program to a wider audience.

Why is it called alpha testing?

The stage is called alpha testing because it comes first—just like its namesake being the first letter of the Greek alphabet. It marks the first overall end-to-end test of a product in development.

‘Alpha’ can also refer to something that’s either in the early stages of development, or incomplete due to bugs and technical issues. For example, you might read about a new mobile app being in its alpha stage or in alpha testing—essentially, this means that it’s not ready for public testing or viewing, and that creases are still being ironed out before its launch into an app store.

Who’s involved in alpha testing?

The alpha testing process is usually kept within a small group of people, all closely linked to the project. Testers are usually categorized as white box and black box.

White box testers are technical, usually the app developers, and have the best knowledge and understanding of the code used to build the software, and—more crucially—know how the product should function.

Black box testers are non-technical and offer insights into more real-world scenarios and user experience. They can be internal workers outside of the development team, or a select group of your audience given early access in return for their testing time and feedback.

How is alpha testing different to beta testing?

Alpha testing is executed in-house, while beta testing opens the floor up to end-users. In alpha testing, white box testers examine the app's core functionality, stability and initial user experience, with the help of some black box testers. Beta testing is fully black-box, and sees users interact with the app in genuine scenarios, providing invaluable insights into their preferences and feelings.

Learn more about beta testing and how to implement a well-executed beta test.

Why is alpha testing important?

In competitive markets like the world of mobile apps, offering a flawless, high-quality product is a must. With up to 80% of users uninstalling apps due to long load times—including waiting for updates to fix crashes and bugs—testing software prior to public release just makes sense.

Almost 63% of app downloads start with a random search, and if you’re launching a new app and are yet to establish brand loyalty, you’ll want to ensure you’re at least offering the best user experience from the start, i.e. an app that doesn’t crash, frustrate users or drain phone batteries.

Alpha testing provides a safety net that allows developers to find and fix problems early on, leading to a better quality release, avoiding teething problems after launch, and actively working to reduce churn.

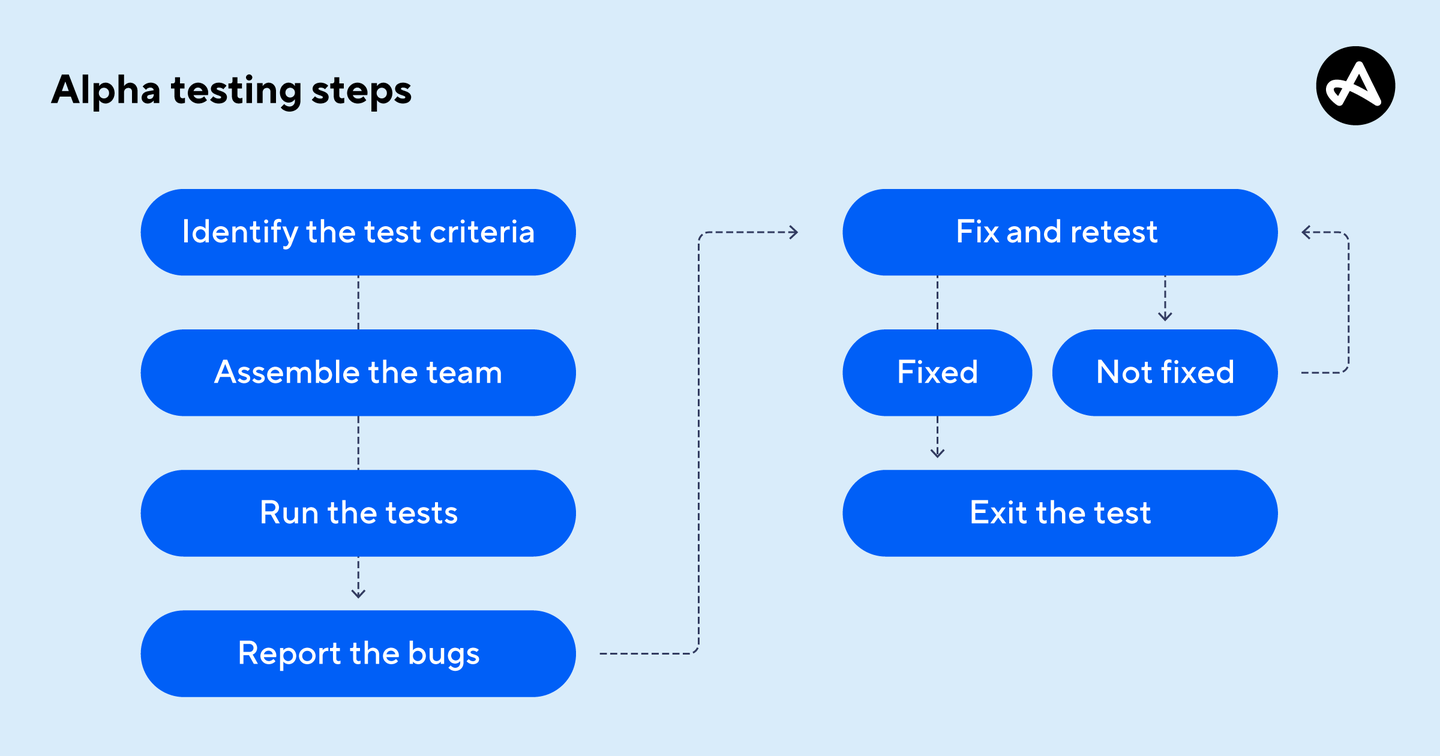

How to run an alpha test

A successful alpha test isn’t necessarily one that’s quick, with nothing to fix and minimal follow up. Remember, the whole point of this stage in software development is to avoid releasing a product that’s full of bugs and disappoints end users. Given that, it’s better to allow more time to catch every issue than to go for a quick release that results in crashes and user attrition.

The exact tests to carry out depend on the software you’re planning to release. For example, a new food tracking app will have different functionality to a mobile game update—the type of mobile game could then further refine the testing phase. No matter the software you’re building, there are a few constants when it comes to alpha testing, so here’s what should be on your to-do list at a minimum:

Identify the alpha testing criteria

During alpha testing, core functionalities, user experience, and performance should all be under the microscope. Make it clear to everyone involved what it is you’re testing, and what the perfect run through looks like. This perfect run through will become your exit criteria—a clear set of benchmarks defining what the go-to-market version looks like, and what would block the launch. Some of the exit criteria will be documented during the testing process as and when bugs are uncovered.

To get started with your testing and exit criteria, think about:

Core functionality

Ask yourself what users should be able to do. List all functionalities and actions, and create test cases to verify functionality (what are the possible outcomes of each action?).

Example: New user registers and logs in.

Test cases: New user registers successfully; user tries to register but already has an account, and must recover or reset their details; new user logs in successfully.
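As a rough illustration, the three registration test cases above could be written down as a small automated check. This is only a sketch: `AccountService` is a hypothetical in-memory stand-in for your app's real sign-up backend, and the return values are assumptions made for the example.

```python
class AccountService:
    """Hypothetical in-memory stand-in for the app's real sign-up backend."""

    def __init__(self):
        self._users = {}  # maps email -> password

    def register(self, email, password):
        # A second registration with the same email is rejected, so the
        # user can be pointed to the recover-or-reset flow instead.
        if email in self._users:
            return "already_registered"
        self._users[email] = password
        return "registered"

    def login(self, email, password):
        # Login succeeds only for a known email with the matching password.
        return email in self._users and self._users[email] == password


def run_registration_cases():
    """Run the three test cases and return a mapping of case name -> passed."""
    service = AccountService()
    results = {}
    results["new user registers successfully"] = (
        service.register("tester@example.com", "first-pass") == "registered"
    )
    results["existing user is told to recover or reset"] = (
        service.register("tester@example.com", "other-pass") == "already_registered"
    )
    results["new user logs in successfully"] = service.login(
        "tester@example.com", "first-pass"
    )
    return results
```

Calling `run_registration_cases()` gives one pass or fail result per test case, which maps neatly onto a row per case in your test plan.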

Stability

Determine the acceptable performance level for your app or software (think about load time, crashes triggered by specific events, and incorrect responses).

Example: Battery drain should not exceed 10% per hour of use; total app launch time should not exceed 5 seconds on a particular device.

Test case: Monitor battery and software performance over set times.
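One way to turn stability benchmarks like these into a repeatable check is sketched below. It reads the battery example as a limit on drain (no more than 10% per hour) and reuses the 5-second launch limit. The `Session` fields and the `check_stability` helper are hypothetical names, and the measurements are assumed to be entered by a tester rather than read from a device.

```python
from dataclasses import dataclass

MAX_BATTERY_DRAIN_PCT_PER_HOUR = 10.0  # threshold taken from the example above
MAX_LAUNCH_SECONDS = 5.0               # threshold taken from the example above


@dataclass
class Session:
    """One measured test session on a given device (values entered by a tester)."""
    device: str
    battery_start_pct: float
    battery_end_pct: float
    duration_hours: float
    launch_seconds: float


def check_stability(session):
    """Return a list of threshold violations; an empty list means the session passed."""
    if session.duration_hours <= 0:
        raise ValueError("duration_hours must be greater than zero")

    problems = []
    drain_per_hour = (
        session.battery_start_pct - session.battery_end_pct
    ) / session.duration_hours
    if drain_per_hour > MAX_BATTERY_DRAIN_PCT_PER_HOUR:
        problems.append(
            f"{session.device}: battery drain {drain_per_hour:.1f}%/hour exceeds limit"
        )
    if session.launch_seconds > MAX_LAUNCH_SECONDS:
        problems.append(
            f"{session.device}: launch took {session.launch_seconds:.1f}s, over limit"
        )
    return problems
```

Each violation returned by `check_stability` is a candidate entry for your bug report, with the device name already attached.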

User experience

Analyze all prompts and actions for clarity and inclusiveness. Consider thought patterns and onward journeys that users might take—as well as those you want them to take.

Example: Is the journey from login to gameplay quick and logical; is it clear how and where users make in-app purchases?

Test case: Consider blind testing features and functions among the team to see what the expectations of key interactions are compared to the reality. Ensure the time taken per task reflects the reward or output a user receives.

Mobile app testing can expand beyond the above core criteria. You might also want to consider tests focusing on:

- Security, including data storage and user authentication

- Cross-device and cross-operating system compatibility, to establish minimum device and software requirements

- Translation and localization, to check that user interfaces adapt correctly to each supported language

- Accessibility and diversity, to identify barriers to interaction

- Analytics integration, to monitor user patterns and identify drop offs and churn

- Crash reporting mechanisms, to enable crash reports to be sent even after launch

Assemble the team and test

Once you’ve set the test criteria, you can brief your teams about the part they’ll play. Small teams will result in more focused and efficient testing, but things can get missed if only a few people are involved. Large teams offer greater coverage, but can be costly, and with more people come challenges in communication.

Strike a balance with 10-12 testers—this is usually enough for most app alpha tests, but make sure technical (white box) and non-technical (black box) testers are involved in order to get a balanced perspective. For example, sales teams and customer relationship managers (CRMs) will be aware of existing customer pain points, and will have spent time understanding and analyzing competitor offerings, so their insight at this stage can save you time and money later down the release line.

Report the bugs

To avoid missing any bugs or errors in your app’s development, set up a reporting document to capture all conversation around what the alpha testing unearths. Don’t rely on email chains or instant messengers (unless you have a dedicated inbox or channel), as tracking can become difficult, and some issues may be connected but discovered at different times by different testers. A shared spreadsheet will do, as long as you’re able to keep a record of:

- The exact issue

- The priority level of the issue

- The steps to reproduce the issue

- The result vs. the expectation

- The solution

- The status

- Supporting notes and attachments
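If you'd like every tester to capture the same fields, the record above could be modeled roughly as follows. This is a minimal sketch: the `BugReport` field names mirror the list, while the priority labels, the default status, and the `to_csv` helper are assumptions made for illustration.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class BugReport:
    """One row in the shared bug report; field names mirror the list above."""
    issue: str
    priority: str              # e.g. "blocker" or "nice_to_have" (labels are an assumption)
    steps_to_reproduce: str
    actual_result: str
    expected_result: str
    solution: str = ""         # filled in once a fix is agreed
    status: str = "open"       # default status is an assumption
    notes: str = ""            # supporting notes or links to attachments


def to_csv(reports):
    """Serialize reports to CSV text that can be pasted into a shared spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(BugReport)])
    writer.writeheader()
    for report in reports:
        writer.writerow(asdict(report))
    return buffer.getvalue()
```

Keeping the result and the expectation as separate columns makes it easier to spot connected issues that different testers found at different times.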

Fix and retest

The penultimate stage in alpha testing focuses on addressing the issues that surfaced in the previous bug reporting stage—this is where your shared spreadsheet comes into play. Once this bug report is handed back to the developers, they’ll get to work diagnosing the problems and putting code fixes in place.

Prioritizing the fixes is key, particularly if time is against you. Some fixes will be mandatory and others will be nice to have. For example, a tester may suggest a faster loading transition, but compared to a broken onboarding journey, it’s less of a go-to-market blocker.
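A simple way to keep that ordering visible is to sort the open issues by priority before handing them to the developers. The sketch below assumes each bug is a dictionary with `issue`, `priority`, and `status` keys, and the priority labels and their ranking are illustrative rather than a fixed standard.

```python
# Lower rank means the bug is fixed first. The labels are assumptions.
PRIORITY_RANK = {"blocker": 0, "high": 1, "nice_to_have": 2}


def triage(bugs):
    """Return unfixed bugs ordered so go-to-market blockers come first."""
    open_bugs = [bug for bug in bugs if bug["status"] != "fixed"]
    # sorted() is stable, so bugs with the same priority keep their reported order.
    return sorted(open_bugs, key=lambda bug: PRIORITY_RANK[bug["priority"]])


bugs = [
    {"issue": "Slow loading transition", "priority": "nice_to_have", "status": "open"},
    {"issue": "Broken onboarding journey", "priority": "blocker", "status": "open"},
    {"issue": "Typo on login screen", "priority": "high", "status": "fixed"},
]

for bug in triage(bugs):
    print(f"{bug['priority']}: {bug['issue']}")
```

Here the broken onboarding journey is listed ahead of the loading transition, and the already fixed typo drops out of the queue.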

Once the developers are happy that all bugs have been rectified, they’ll retest to ensure the amended code doesn’t impact anything else in the software, before giving the initial testers the opportunity to repeat their actions—hopefully without any unexpected events. This is often an iterative process, and developers may need to attempt fixes to the same bug multiple times, depending on how each new code release is received.

Exit the alpha testing stage

When the software is running in a clean state, with no launch-blocking bugs left open, it’s ready to leave the alpha testing stage. At this point, revisit the exit criteria (remember the benchmarks mentioned at the start?) and confirm that each one has been met.
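The exit decision can be expressed as a short check, sketched here under a few assumptions: the exit criteria are recorded as a mapping of benchmark name to pass or fail, open bugs are dictionaries with a `priority` key, and `"blocker"` is the label used for launch-blocking issues. `ready_to_exit` is a hypothetical helper name.

```python
def ready_to_exit(criteria_results, open_bugs):
    """Decide whether the build can leave alpha testing.

    Returns a tuple of (ready, unmet_criteria, blocking_bugs) so the team
    can see why a build was held back, not only that it was.
    """
    unmet = [name for name, passed in criteria_results.items() if not passed]
    blockers = [bug for bug in open_bugs if bug["priority"] == "blocker"]
    ready = not unmet and not blockers
    return ready, unmet, blockers


criteria = {
    "new user can register and log in": True,
    "launch time within agreed limit": False,
}
ready, unmet, blockers = ready_to_exit(criteria, open_bugs=[])
print("Ready for beta:", ready)
print("Unmet criteria:", unmet)
```

Returning the unmet criteria alongside the yes or no answer gives the team a ready-made list for the next fix and retest round.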

The final stage of alpha testing isn’t the last test phase, but it is a huge milestone to get to. Next up is beta testing, a test stage performed with a group of your target users, aiming to gather real-world feedback on performance, usability, and possible issues, providing valuable insights for final refinements before public launch.

Alpha testing can be the difference between a flawless app release that attracts, engages and retains an audience, and one that crashes upon login, doesn’t accurately track progress, and ends up being uninstalled. Get the alpha testing stage right and you’ll carve out a clean path towards a successful app release.

To learn how to measure your app’s success, and how users interact with your product once live, request a demo with Adjust today.
